Serializable

Information about the SSI implementation for the SERIALIZABLE transaction isolation level in PostgreSQL, new in release 9.1.

Overview

With true serializable transactions, if you can show that your transaction will do the right thing if there are no concurrent transactions, it will do the right thing in any mix of serializable transactions or be rolled back with a serialization failure.

This document is oriented toward the techniques used to implement the feature in PostgreSQL. For information oriented toward application programmers and database administrators, see the SSI Wiki page. Another description of SSI and how PostgreSQL's implementation differs from previous work is available in our paper Serializable Snapshot Isolation in PostgreSQL from VLDB 2012[5].

Serializable and Snapshot Transaction Isolation Levels

Serializable transaction isolation is attractive for shops with active development by many programmers against a complex schema because it guarantees data integrity with very little staff time -- if a transaction can be shown to always do the right thing when it is run alone (before or after any other transaction), it will always do the right thing in any mix of concurrent serializable transactions. Where conflicts with other transactions would result in an inconsistent state within the database or an inconsistent view of the data, a serializable transaction will block or roll back to prevent the anomaly. The SQL standard provides a specific SQLSTATE for errors generated when a transaction rolls back for this reason, so that transactions can be retried automatically.
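
Because the failure has a dedicated SQLSTATE (40001), client code can retry it mechanically. The following is a minimal, driver-agnostic Python sketch. `SerializationFailure` and its `sqlstate` attribute are hypothetical stand-ins for whatever error type a real client library raises, and `run_serializable` is an illustrative helper, not part of any PostgreSQL API.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

SERIALIZATION_FAILURE = "40001"  # standard SQLSTATE for serialization failure


class SerializationFailure(Exception):
    """Hypothetical driver error carrying a SQLSTATE code."""

    def __init__(self, sqlstate: str, message: str = "") -> None:
        super().__init__(message)
        self.sqlstate = sqlstate


def run_serializable(txn: Callable[[], T], max_attempts: int = 5,
                     base_delay: float = 0.0) -> T:
    """Run txn, re-running it from the start whenever it fails with SQLSTATE 40001."""
    for attempt in range(1, max_attempts + 1):
        try:
            return txn()
        except SerializationFailure as err:
            # Only serialization failures are safe to retry blindly;
            # anything else, or running out of attempts, propagates.
            if err.sqlstate != SERIALIZATION_FAILURE or attempt == max_attempts:
                raise
            # Small randomized pause before re-running the whole transaction.
            time.sleep(base_delay * random.random())
    raise AssertionError("max_attempts must be at least 1")
```

The whole transaction, including the reads that fed its decisions, has to be re-run; replaying only the failed statement would reuse stale reads.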

Before version 9.1, PostgreSQL did not support a full serializable isolation level. A request for serializable transaction isolation actually provided snapshot isolation. Snapshot isolation has well-known anomalies which can allow data corruption or inconsistent views of the data during concurrent transactions, although these anomalies only occur when certain patterns of read-write dependencies exist within a set of concurrent transactions. Where these patterns exist, the anomalies can be prevented by introducing conflicts through explicitly programmed locks or otherwise unnecessary writes to the database. Snapshot isolation is popular because its performance is better than that of serializable isolation, and the integrity guarantees it does provide allow anomalies to be avoided or managed with reasonable effort in many environments.
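
The classic snapshot isolation anomaly is write skew. The toy model below is a sketch, not anything resembling PostgreSQL internals: each transaction reads from a private snapshot, and only write-write conflicts are rejected (first committer wins), as under snapshot isolation. The names `SnapshotDB`, `Txn` and `go_off_call`, and the "at least one doctor on call" rule, are hypothetical. Two transactions each see two doctors on call, each takes a different one off call, and both commit, leaving nobody on call: a result no serial order could produce.

```python
from dataclasses import dataclass, field


@dataclass
class SnapshotDB:
    """Toy store with snapshot isolation: private snapshots, first committer wins."""
    data: dict
    last_write: dict = field(default_factory=dict)  # key -> commit number of last writer
    clock: int = 0

    def begin(self) -> "Txn":
        return Txn(self, dict(self.data), self.clock)


@dataclass
class Txn:
    db: SnapshotDB
    snapshot: dict
    start: int
    writes: dict = field(default_factory=dict)

    def read(self, key):
        return self.writes.get(key, self.snapshot[key])

    def write(self, key, value):
        self.writes[key] = value

    def commit(self) -> bool:
        # Snapshot isolation only rejects ww-conflicts with transactions
        # that committed after this transaction's snapshot was taken.
        if any(self.db.last_write.get(k, 0) > self.start for k in self.writes):
            return False
        self.db.clock += 1
        for key, value in self.writes.items():
            self.db.data[key] = value
            self.db.last_write[key] = self.db.clock
        return True


def go_off_call(txn: Txn, doctor: str) -> None:
    # Hypothetical business rule: leave only if at least two doctors are on call.
    if sum(txn.read(d) for d in ("alice", "bob")) >= 2:
        txn.write(doctor, False)


db = SnapshotDB({"alice": True, "bob": True})
t1, t2 = db.begin(), db.begin()  # both snapshots show two doctors on call
go_off_call(t1, "alice")
go_off_call(t2, "bob")
print(t1.commit(), t2.commit())  # True True: disjoint writes, no ww-conflict
print(db.data)                   # {'alice': False, 'bob': False}
```

Run one after the other, the second transaction would see only one doctor on call and leave the data alone; the anomaly exists only because the two reads and writes overlap.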

Serializable Isolation Implementation Strategies

Techniques for implementing full serializable isolation have been published, and used in many database products, for decades. The primary technique which has been used is Strict Two-Phase Locking (S2PL), which operates by blocking writes against data which has been read by concurrent transactions and blocking any access (read or write) against data which has been written by concurrent transactions. A cycle in a graph of blocking indicates a deadlock, requiring a rollback. Blocking and deadlocks under S2PL in high contention workloads can be debilitating, crippling throughput and response time.
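
A minimal sketch of S2PL blocking, assuming only two lock modes (shared for reads, exclusive for writes) and a hypothetical `S2PLLockTable` class. A request that conflicts with a lock held by another transaction records wait-for edges, and a cycle in that wait-for graph is reported as a deadlock. A real lock manager would also queue waiters, release locks at commit and choose a victim; this only shows the blocking rule and the cycle check.

```python
from collections import defaultdict

# Shared (read) locks are compatible with each other; exclusive (write) locks with nothing.
COMPATIBLE = {("S", "S"): True, ("S", "X"): False, ("X", "S"): False, ("X", "X"): False}


class S2PLLockTable:
    """Toy S2PL lock table; locks would be held until commit (release is omitted)."""

    def __init__(self):
        self.holders = defaultdict(dict)   # item -> {txn: mode held}
        self.waits_for = defaultdict(set)  # txn -> txns it is blocked behind

    def request(self, txn, item, mode):
        """Return 'granted', 'blocked', or 'deadlock' for a lock request."""
        blockers = {other for other, held in self.holders[item].items()
                    if other != txn and not COMPATIBLE[(held, mode)]}
        if not blockers:
            current = self.holders[item].get(txn)
            # Upgrade S to X if needed, but never downgrade an X lock.
            self.holders[item][txn] = "X" if "X" in (current, mode) else "S"
            return "granted"
        self.waits_for[txn] |= blockers
        return "deadlock" if self._in_cycle(txn) else "blocked"

    def _in_cycle(self, start):
        # Depth-first search of the wait-for graph for a path back to start.
        stack, seen = list(self.waits_for[start]), set()
        while stack:
            txn = stack.pop()
            if txn == start:
                return True
            if txn not in seen:
                seen.add(txn)
                stack.extend(self.waits_for[txn])
        return False


locks = S2PLLockTable()
print(locks.request("T1", "row_a", "S"))  # granted
print(locks.request("T2", "row_b", "S"))  # granted
print(locks.request("T1", "row_b", "X"))  # blocked: T2 has read row_b
print(locks.request("T2", "row_a", "X"))  # deadlock: T1 and T2 wait for each other
```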

A new technique for implementing full serializable isolation in an MVCC database appeared in the literature beginning in 2008[1][2]. This technique, known as Serializable Snapshot Isolation (SSI), has many of the advantages of snapshot isolation. In particular, reads don't block anything and writes don't block reads. Essentially, it runs snapshot isolation but monitors the read-write conflicts between transactions to identify dangerous structures in the transaction graph which indicate that a set of concurrent transactions might produce an anomaly, and rolls back transactions to ensure that no anomalies occur. It will produce some false positives (where a transaction is rolled back even though there would not have been an anomaly), but will never let an anomaly occur. In the two known prototype implementations, performance for many workloads (even with the need to restart transactions which are rolled back) is very close to snapshot isolation and generally far better than an S2PL implementation.

Apparent Serial Order of Execution

One way to understand when snapshot anomalies can occur, and to visualize the difference between the serializable implementations described above, is to consider that among transactions executing at the serializable transaction isolation level, the results are required to be consistent with some serial (one-at-a-time) execution of the transactions[4]. How is that order determined in each?

In S2PL, each transaction locks any data it accesses. It holds the locks until committing, preventing other transactions from making conflicting accesses to the same data in the interim. Some transactions may have to be rolled back to prevent deadlock. But successful transactions can always be viewed as having occurred sequentially, in the order they committed.

With snapshot isolation, reads never block writes, nor vice versa, so more concurrency is possible. The order in which transactions appear to have executed is determined by something more subtle than in S2PL: read/write dependencies. If a transaction reads data, it appears to execute after the transaction that wrote the data it is reading. Similarly, if it updates data, it appears to execute after the transaction that wrote the previous version. These dependencies, which we call "wr-dependencies" and "ww-dependencies", are consistent with the commit order, because the first transaction must have committed before the second starts. However, there can also be dependencies between two *concurrent* transactions, i.e. where one was running when the other acquired its snapshot. These "rw-conflicts" occur when one transaction attempts to read data which is not visible to it because the transaction which wrote it (or will later write it) is concurrent. The reading transaction appears to have executed first, regardless of the actual sequence of transaction starts or commits, because it sees a database state prior to that in which the other transaction leaves it.

Anomalies occur when a cycle is created in the graph of dependencies: when a dependency or series of dependencies causes transaction A to appear to have executed before transaction B, but another series of dependencies causes B to appear before A. If that's the case, then the results can't be consistent with any serial execution of the transactions.
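
One way to make this concrete is to treat each dependency as an edge `(A, B)` meaning "A appears to execute before B" and look for a topological order of the transactions. The sketch below uses Python's standard `graphlib` module (Python 3.9+); `apparent_serial_order` is a hypothetical helper name. Any order it returns is a serial order consistent with every dependency; a cycle means no such order exists, which is exactly an anomaly.

```python
from graphlib import CycleError, TopologicalSorter


def apparent_serial_order(edges):
    """edges: (before, after) pairs from wr-, ww- or rw-dependencies.

    Return one serial order consistent with all of them, or None if the
    dependency graph has a cycle (a serialization anomaly).
    """
    graph = {}
    for before, after in edges:
        graph.setdefault(after, set()).add(before)  # map node -> its predecessors
        graph.setdefault(before, set())
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError:
        return None


print(apparent_serial_order([("T1", "T2"), ("T2", "T3")]))  # ['T1', 'T2', 'T3']
# Write skew: each transaction read data the other wrote (an rw-conflict each way).
print(apparent_serial_order([("T1", "T2"), ("T2", "T1")]))  # None
```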

SSI Algorithm

Serializable transactions in PostgreSQL are implemented using Serializable Snapshot Isolation (SSI), based on the work of Cahill et al. Fundamentally, this allows snapshot isolation to run as it always has, while monitoring for conditions which could create a serialization anomaly.

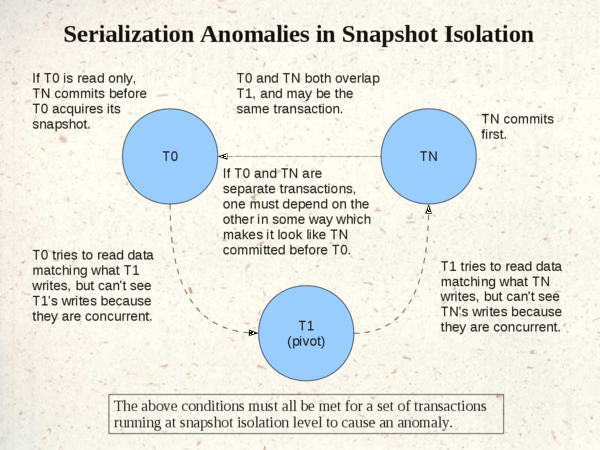

SSI is based on the observation[2] that each snapshot isolation anomaly corresponds to a cycle that contains a "dangerous structure" of two adjacent rw-conflict edges:

- Tin ----rw---> Tpivot ----rw---> Tout

SSI works by watching for this dangerous structure, and rolling back a transaction when needed to prevent any anomaly. This means it only needs to track rw-conflicts between concurrent transactions, not wr- and ww-dependencies. It also means there is a risk of false positives, because not every dangerous structure corresponds to an actual serialization failure.
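
Detecting the pattern needs only the rw-conflict edges. In the sketch below (a hypothetical helper, not PostgreSQL code), each pair `(reader, writer)` records `reader --rw--> writer`, and every pair of consecutive edges is reported as a candidate dangerous structure. The second call shows a false positive: a structure is flagged even though no edge closes a cycle.

```python
from collections import defaultdict


def dangerous_structures(rw_edges):
    """rw_edges: (reader, writer) pairs, i.e. reader --rw--> writer.

    Return every (t_in, t_pivot, t_out) formed by two consecutive rw-edges.
    t_in and t_out may be the same transaction when two transactions form the cycle.
    """
    out_edges = defaultdict(set)
    for reader, writer in rw_edges:
        out_edges[reader].add(writer)
    found = []
    for t_in, pivots in out_edges.items():
        for t_pivot in pivots:
            # .get() avoids inserting keys into out_edges while iterating it.
            for t_out in out_edges.get(t_pivot, ()):
                found.append((t_in, t_pivot, t_out))
    return sorted(found)


print(dangerous_structures([("T1", "T2"), ("T2", "T1")]))  # [('T1', 'T2', 'T1'), ('T2', 'T1', 'T2')]
print(dangerous_structures([("T1", "T2"), ("T2", "T3")]))  # [('T1', 'T2', 'T3')]
```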

The PostgreSQL implementation uses two additional optimizations:

- Tout must commit before any other transaction in the cycle (see proof of Theorem 2.1 of [2]). We only roll back a transaction if Tout commits before Tpivot and Tin.

- If Tin is read-only, there can only be an anomaly if Tout committed before Tin takes its snapshot. This optimization is an original one. Proof:

  - Because there is a cycle, there must be some transaction T0 that precedes Tin in the serial order. (T0 might be the same as Tout.)

  - The dependency between T0 and Tin can't be a rw-conflict, because Tin was read-only, so it must be a ww- or wr-dependency. Those can only occur if T0 committed before Tin started.

  - Because Tout must commit before any other transaction in the cycle, it must commit before T0 commits, and thus before Tin starts.
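
The two optimizations can be combined into a single check. This simplified sketch assumes one logical clock orders snapshots and commits (a snapshot taken at `snapshot_seq` sees commits with smaller numbers); `TxnInfo` and `must_roll_back` are hypothetical names, and the real implementation tracks considerably more state. It decides only whether a detected structure is still dangerous, not which transaction to cancel.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TxnInfo:
    snapshot_seq: int                 # logical time the snapshot was taken
    commit_seq: Optional[int] = None  # logical commit time, None while running
    read_only: bool = False


def must_roll_back(t_in: TxnInfo, t_pivot: TxnInfo, t_out: TxnInfo) -> bool:
    """Is the structure Tin --rw--> Tpivot --rw--> Tout actually dangerous?"""
    # Rule 1: a real cycle needs Tout to commit before both Tpivot and Tin.
    if t_out.commit_seq is None:
        return False
    for other in (t_in, t_pivot):
        if other.commit_seq is not None and other.commit_seq < t_out.commit_seq:
            return False
    # Rule 2: with a read-only Tin, Tout must have committed before Tin's snapshot.
    if t_in.read_only and t_out.commit_seq >= t_in.snapshot_seq:
        return False
    return True


t_in, t_pivot = TxnInfo(snapshot_seq=1), TxnInfo(snapshot_seq=2)
print(must_roll_back(t_in, t_pivot, TxnInfo(snapshot_seq=3)))                # False: Tout still running
print(must_roll_back(t_in, t_pivot, TxnInfo(snapshot_seq=3, commit_seq=5)))  # True: Tout committed first
ro_in = TxnInfo(snapshot_seq=1, read_only=True)
print(must_roll_back(ro_in, t_pivot, TxnInfo(snapshot_seq=3, commit_seq=5)))  # False: committed after Tin's snapshot
```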

PostgreSQL Implementation

Notable aspects of the PostgreSQL implementation of SSI include:

- Since this technique is based on Snapshot Isolation (SI), those areas in PostgreSQL which don't use SI can't be brought under SSI. This includes system tables, temporary tables, sequences, hint bit rewrites, etc. SSI cannot eliminate existing anomalies in these areas.

- Any transaction which is run at a transaction isolation level other than SERIALIZABLE will not be affected by SSI. If you want to enforce business rules through SSI, all transactions should be run at the SERIALIZABLE transaction isolation level, and that should probably be set as the default.

- If all transactions are run at the SERIALIZABLE transaction isolation level, business rules can be enforced in triggers or application code without ever having a need to acquire an explicit lock or to use SELECT FOR SHARE or SELECT FOR UPDATE.

- Those who want to continue to use snapshot isolation without the additional protections of SSI (and the associated costs of enforcing those protections) can use the REPEATABLE READ transaction isolation level. This level retains its legacy behavior, which is identical to the old SERIALIZABLE implementation and fully consistent with the standard's requirements for the REPEATABLE READ transaction isolation level.

- Performance under this SSI implementation will be significantly improved if transactions which don't modify permanent tables are declared to be READ ONLY before they begin reading data.

- Performance under SSI will tend to degrade more rapidly with a large number of active database transactions than under less strict isolation levels. Limiting the number of active transactions through use of a connection pool or similar techniques may be necessary to maintain good performance.

- Any transaction which must be rolled back to prevent serialization anomalies will fail with SQLSTATE 40001, which has a standard meaning of "serialization failure".

- This SSI implementation makes an effort to choose the transaction to be cancelled such that an immediate retry of the transaction cannot fail due to conflicts with exactly the same transactions. Pursuant to this goal, no transaction is cancelled until one of the other transactions in the set of conflicts which could generate an anomaly has successfully committed. This is conceptually similar to how write conflicts are handled.

- Modifying a heap tuple creates a rw-conflict with any transaction that holds a SIREAD lock on that tuple, or on the page or relation that contains it.

- Inserting a new tuple creates a rw-conflict with any transaction holding a SIREAD lock on the entire relation. It doesn't conflict with page-level locks, because page-level locks are only used to aggregate tuple locks. Unlike index page locks, they don't lock "gaps" on the page.
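
A minimal sketch of the last two rules, with SIREAD locks kept in a dictionary keyed by lock target. `Target`, `SIReadLocks` and its methods are hypothetical names; each transaction returned would get a rw-conflict edge `reader --rw--> writer`. The writer's own locks are skipped, since a transaction cannot conflict with itself.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class Target:
    """Lock target: a whole relation, one page of it, or one tuple on that page."""
    relation: str
    page: Optional[int] = None
    tuple_no: Optional[int] = None


class SIReadLocks:
    def __init__(self) -> None:
        self.holders = {}  # Target -> set of transactions holding an SIREAD lock on it

    def acquire(self, xact: str, target: Target) -> None:
        self.holders.setdefault(target, set()).add(xact)

    def _readers(self, targets, writer: str) -> Set[str]:
        found = set()
        for target in targets:
            found |= self.holders.get(target, set())
        found.discard(writer)  # a transaction never conflicts with itself
        return found

    def conflicts_for_update(self, writer: str, rel: str, page: int, tup: int) -> Set[str]:
        # Modifying an existing tuple: tuple, page and relation locks all conflict.
        return self._readers([Target(rel, page, tup), Target(rel, page), Target(rel)], writer)

    def conflicts_for_insert(self, writer: str, rel: str) -> Set[str]:
        # New heap tuple: only relation locks conflict; page locks don't cover gaps.
        return self._readers([Target(rel)], writer)


locks = SIReadLocks()
locks.acquire("T1", Target("accounts", 3, 7))  # read a single tuple
locks.acquire("T2", Target("accounts", 3))     # page-level lock
locks.acquire("T3", Target("accounts"))        # relation lock, e.g. from a table scan
print(sorted(locks.conflicts_for_update("T9", "accounts", 3, 7)))  # ['T1', 'T2', 'T3']
print(sorted(locks.conflicts_for_insert("T9", "accounts")))        # ['T3']
```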

Current Status

Accepted as a feature for PostgreSQL 9.1!

Many thanks to Joe, Heikki, Jeff, and Anssi for posing questions and making suggestions which have led to improvements in the patch! Thanks to Markus for providing dtester at a critical juncture, which allowed progress to continue, and Heikki for developing the src/test/isolation code to move the dcheck tests into the main PostgreSQL testing framework. Also, thanks to the many who have participated in discussions along the way.

There are some features which should be considered for 9.2 once 9.1 has settled down, most notably integration with hot standby and fine-grained support for index AMs other than btree. Most other proposed work is related to possible performance improvements, each of which should be carefully benchmarked before being accepted. At the top of that list is better optimization of de facto read-only transactions: those which aren't flagged as read only, but which don't actually do any writes to permanent database tables.

Development Path

In general, the approach taken was to try for the fastest possible implementation of a serializable isolation level which allowed no anomalies, even though it had many false positives and very poor performance, and then optimize until the rollback rate and overall performance were within a range which allows practical application. No existing isolation level was removed, since not everyone will want to pay the performance price for true serializable behavior. An important goal was that for those not using serializable transaction isolation, the patch doesn't cause performance regression.

Credits

Feature Authors: Kevin Grittner and Dan R. K. Ports.

Testing Support Authors: Markus Wanner (dtester used during most of development) and Heikki Linnakangas (testing support consistent with other PostgreSQL regression testing, so that we had a testing suite suitable for commit).

Reviewers: Joe Conway (warning elimination, bug chasing, and style comments), Jeff Davis (general review and found problems with GiST support and lack of 2PC support), Anssi Kääriäinen (found problems with conditional indexes and performance issue with sequential scans during testing with production data), YAMAMOTO Takashi (found numerous bugs during long and heavy testing), and Heikki Linnakangas (general review and many useful observations and suggestions, plus general improvements during commit process).

Committers: Joe Conway (initial comment and name changes), Heikki Linnakangas (the bulk of the patch and most follow-up fixes), and Robert Haas (some follow-up fixes).

Thanks to all those who participated in the on-list discussions and offered advice and support off-list. There were so many who contributed in this way it would be practically impossible to generate an accurate list, but Robert Haas stands out for offering great advice on an overall development strategy.

Special thanks to Emmanuel Cecchet for pointing out the ACM SIGMOD paper in which this technique was originally published[1], and to all those at the University of Sydney who contributed to the development of this innovative technique. This is what turned the discussion from wrangling over how best to document existing behavior toward changing it.

Source Code Management

A "serializable" git branch has been set up at this location:

git://git.postgresql.org/git/users/kgrittn/postgres.git

http://git.postgresql.org/git/users/kgrittn/postgres.git

ssh://git@git.postgresql.org/users/kgrittn/postgres.git

http://git.postgresql.org/gitweb?p=users/kgrittn/postgres.git;a=shortlog;h=refs/heads/serializable

Predicate Locking

Both S2PL and SSI require some form of predicate locking to handle situations where reads conflict with later inserts or with later updates which move data into the selected range. PostgreSQL didn't have predicate locking, so it needed to be added. Practical implementations of predicate locking generally involve acquiring locks against data as it is accessed, using multiple granularities (tuple, page, table, etc.) with escalation as needed to keep the lock count to a number which can be tracked within RAM structures. Coarse granularities can cause some false positive indications of conflict. The number of false positives can be influenced by plan choice.

Implementation overview

New RAM structures, inspired by those used to track traditional locks in PostgreSQL, but tailored to the needs of SIREAD predicate locking, will be used. These will refer to physical objects actually accessed in the course of executing the query, to model the predicates through inference. Anyone interested in this subject should review the Hellerstein, Stonebraker and Hamilton paper[3], along with the locking papers referenced from that and the Cahill papers[1][2].

Because the SIREAD locks don't block, traditional locking techniques must be modified. Intent locking (locking higher level objects before locking lower level objects) doesn't work with non-blocking "locks" (which are, in some respects, more like flags than locks).

A configurable amount of shared memory is reserved at postmaster start-up to track predicate locks. This size cannot be changed without a restart.

- To prevent resource exhaustion, multiple fine-grained locks may be promoted to a single coarser-grained lock as needed.

- An attempt to acquire an SIREAD lock on a tuple when the same transaction already holds an SIREAD lock on the page or the relation will be ignored. Likewise, an attempt to lock a page when the relation is locked will be ignored, and the acquisition of a coarser lock will result in the automatic release of all finer-grained locks it covers.
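
The promotion and coverage rules can be sketched per transaction as follows. The thresholds `tuples_per_page` and `pages_per_relation`, and the class name, are hypothetical illustration parameters rather than PostgreSQL settings. The point is that a finer request under a coarser lock is ignored, and acquiring a coarser lock discards the finer locks it covers.

```python
from collections import defaultdict


class GranularLockSet:
    """One transaction's SIREAD locks at tuple, page and relation granularity."""

    def __init__(self, tuples_per_page=3, pages_per_relation=2):
        self.tuples_per_page = tuples_per_page        # hypothetical promotion limit
        self.pages_per_relation = pages_per_relation  # hypothetical promotion limit
        self.relations = set()
        self.pages = defaultdict(set)   # relation -> locked page numbers
        self.tuples = defaultdict(set)  # (relation, page) -> locked tuple numbers

    def lock_tuple(self, rel, page, tup):
        if rel in self.relations or page in self.pages[rel]:
            return  # already covered by a coarser lock: ignore the request
        self.tuples[(rel, page)].add(tup)
        if len(self.tuples[(rel, page)]) > self.tuples_per_page:
            self.lock_page(rel, page)  # promote to avoid tracking too many locks

    def lock_page(self, rel, page):
        if rel in self.relations:
            return
        self.tuples.pop((rel, page), None)  # page lock replaces its tuple locks
        self.pages[rel].add(page)
        if len(self.pages[rel]) > self.pages_per_relation:
            self.lock_relation(rel)

    def lock_relation(self, rel):
        self.relations.add(rel)
        self.pages.pop(rel, None)  # relation lock replaces page and tuple locks
        for key in [k for k in self.tuples if k[0] == rel]:
            del self.tuples[key]


locks = GranularLockSet(tuples_per_page=2, pages_per_relation=1)
for tup in (1, 2, 3):
    locks.lock_tuple("orders", 0, tup)  # the third tuple promotes to a page lock
locks.lock_tuple("orders", 0, 9)        # ignored: page 0 is already locked
print(dict(locks.pages), dict(locks.tuples))  # {'orders': {0}} {}
```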

Heap locking

Predicate locks will be acquired for the heap based on the following:

- For a table scan, the entire relation will be locked.

- Each tuple read which is visible to the reading transaction will be locked, whether or not it meets selection criteria; except that there is no need to acquire an SIREAD lock on a tuple when the transaction already holds a write lock on any tuple representing the row, since a rw-dependency would also create a ww-dependency which has more aggressive enforcement and will thus prevent any anomaly.

Default index locking

There is a new ampredlocks flag in pg_am which should be set to false for any index which doesn't handle the predicate locking internally; indexes flagged this way will be predicate locked at the index relation level. Such a lock will conflict with any insert into the index, but will not conflict, for example, with deletes, HOT updates, or inserts which don't match the WHERE clause on an index (if present). This will allow correct behavior at the serializable transaction isolation level for new index types with minimal initial effort; but adding the predicate locking calls and changing the flag will improve performance in high contention workloads involving serializable transactions.

Index AM implementations

Since predicate locks only exist to detect writes which conflict with earlier reads, and heap tuple locks are acquired to cover all heap tuples actually read, including those read through indexes, the index tuples which were actually scanned are not of interest in themselves; we only care about their "new neighbors" -- later inserts into the index which would have been included in the scan had they existed at the time. Conceptually, we want to lock the gaps between and surrounding index entries within the scanned range.

Correctness requires that any insert into an index generate a rw-conflict with a concurrent serializable transaction if, after that insert, re-execution of any index scan of the other transaction would access the heap for a row not accessed during the previous execution. Note that a non-HOT update which expires an old index entry covered by the scan and adds a new entry for the modified row's new tuple need not generate a conflict, although an update which "moves" a row into the scan must generate a conflict. While correctness allows false positives, they should be minimized for performance reasons.
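
The "gap" requirement can be illustrated by locking the key interval a scan covered rather than the entries it returned. This is a logical-range sketch in the spirit of the next-key locking discussed in [3], not PostgreSQL's mechanism (PostgreSQL's btree support places its predicate locks at the leaf level instead); `RangePredicateLocks` is a hypothetical name, and keys are assumed to be integers.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ScannedRange:
    reader: str
    low: int   # inclusive lower bound of the scan condition
    high: int  # inclusive upper bound of the scan condition


@dataclass
class RangePredicateLocks:
    ranges: List[ScannedRange] = field(default_factory=list)

    def record_scan(self, reader: str, low: int, high: int) -> None:
        # Lock the whole interval, including gaps between keys that existed at scan time.
        self.ranges.append(ScannedRange(reader, low, high))

    def conflicts_for_insert(self, writer: str, key: int) -> Set[str]:
        # Any other reader whose scan would now return the new key gets a rw-conflict.
        return {r.reader for r in self.ranges
                if r.reader != writer and r.low <= key <= r.high}


locks = RangePredicateLocks()
locks.record_scan("T1", 10, 20)              # suppose the index held only keys 10 and 20
print(locks.conflicts_for_insert("T2", 15))  # {'T1'}: new key lands in a scanned gap
print(locks.conflicts_for_insert("T2", 25))  # set()
```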

Several optimizations are possible:

- An index scan which is just finding the right position for an index insertion or deletion need not acquire a predicate lock.

- An index scan which is comparing for equality on the entire key for a unique index need not acquire a predicate lock as long as a key is found corresponding to a visible tuple which has not been modified by another transaction -- there are no "between or around" gaps to cover.

- As long as built-in foreign key enforcement continues to use its current "special tricks" to deal with MVCC issues, predicate locks should not be needed for scans done by enforcement code.

- If a search determines that no rows can be found regardless of index contents because the search conditions are contradictory (e.g., x = 1 AND x = 2), then no predicate lock is needed.

Other index AM implementation considerations:

- If a btree search discovers that no root page has yet been created, a predicate lock on the index relation is required; otherwise btree searches must get to the leaf level to determine which tuples match, so predicate locks go there.

- GiST searches can determine that there are no matches at any level of the index, so there must be a predicate lock at each index level during a GiST search. An index insert at the leaf level can then be trusted to ripple up to all levels and locations where conflicting predicate locks may exist.

- The effects of page splits, overflows, consolidations, and removals must be carefully reviewed to ensure that predicate locks aren't "lost" during those operations, or kept with pages which could get re-used for different parts of the index.
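
A sketch of keeping page-level predicate locks intact across structural changes, assuming locks are a simple map from page number to holders. `PageLockTable` and its methods are hypothetical. On a split, holders of the old page also lock the new page, since keys they covered may have moved there. When a page is emptied and removed, its locks move to the page that absorbs its key range, so nothing is lost and a recycled page starts with no stale locks.

```python
class PageLockTable:
    """Page-level predicate locks: page number -> set of reader transactions."""

    def __init__(self):
        self.locks = {}

    def lock(self, xact, page):
        self.locks.setdefault(page, set()).add(xact)

    def holders(self, page):
        return set(self.locks.get(page, set()))

    def on_split(self, old_page, new_page):
        # Keys that moved to new_page were covered by old_page's locks, so every
        # holder of old_page must now hold new_page too (old_page keeps its locks).
        moved = self.locks.get(old_page, set())
        if moved:
            self.locks.setdefault(new_page, set()).update(moved)

    def on_merge_and_delete(self, deleted_page, survivor):
        # The survivor absorbs the deleted page's key range and its locks; the
        # deleted page keeps none, so if it is later reused for another part of
        # the index it cannot carry stale locks.
        moved = self.locks.pop(deleted_page, set())
        if moved:
            self.locks.setdefault(survivor, set()).update(moved)


pages = PageLockTable()
pages.lock("T1", 5)
pages.on_split(5, 9)                    # upper half of page 5 moves to new page 9
print(pages.holders(9))                 # {'T1'}
pages.lock("T2", 9)
pages.on_merge_and_delete(9, 5)         # page 9 emptied; its range folds back into page 5
print(sorted(pages.holders(5)), pages.holders(9))  # ['T1', 'T2'] set()
```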

Testing

For this development effort to succeed, it was absolutely necessary to have some client application which allowed execution of test scripts with specific interleaving of statements run against multiple backends. The dtester module from Markus Wanner was used for this during most of development. It requires Python and several Python packages (including Twisted). Due to package dependencies and licensing issues, the dtester module was not appropriate for commit to the PostgreSQL code base.

Heikki Linnakangas developed a testing framework based on existing regression test code which has been committed to src/test/isolation. Besides being compatible with other PostgreSQL testing, it runs faster than dtester. It doesn't provide a nice display of the results by statement ordering permutation, but that can be added if needed by filtering the current output.

Like many other proposed features and optimizations, this area could benefit from a "performance test farm" so that serializable performance can be better compared to other isolation levels, and so the performance impact of future enhancements can be determined.

Documentation

A README-SSI file was created, largely drawn from this Wiki page.

Someone with update rights to Wikipedia should probably update references there which will be outdated with this feature:

- http://en.wikipedia.org/wiki/Snapshot_isolation

- http://en.wikipedia.org/wiki/Isolation_%28database_systems%29

Innovations

The PostgreSQL implementation of Serializable Snapshot Isolation differs from what is described in the cited papers for several reasons:

- PostgreSQL didn't have any existing predicate locking. It had to be added from scratch.

- The existing in-memory lock structures were not suitable for tracking SIREAD locks.

- The database products used for the prototype implementations for the papers used update-in-place with a rollback log for their MVCC implementations, while PostgreSQL leaves the old version of a row in place and adds a new tuple to represent the row at a new location.

- In PostgreSQL, tuple level locks are not held in RAM for any length of time; lock information is written to the tuples involved in the transactions.

- In PostgreSQL, existing lock structures have pointers to memory which is related to a connection. SIREAD locks need to persist past the end of the originating transaction and even the connection which ran it.

- PostgreSQL needs to be able to tolerate a large number of transactions executing while one long-running transaction stays open -- the in-RAM techniques discussed in the papers wouldn't support that.

- Unlike the database products used for the prototypes described in the papers, PostgreSQL didn't already have a true serializable isolation level distinct from snapshot isolation.

- PostgreSQL supports subtransactions -- an issue not mentioned in the papers.

- PostgreSQL doesn't assign a transaction number to a database transaction until and unless necessary.

- PostgreSQL has pluggable data types with user-definable operators, as well as pluggable index types, not all of which are based around data types which support ordering.

- Some possible optimizations became apparent during development and testing.

Differences from the implementation described in the papers are listed below.

- New structures needed to be created in shared memory to track the proper information for serializable transactions and their SIREAD locks.

- Because PostgreSQL does not have the same concept of an "oldest transaction ID" for all serializable transactions as assumed in the Cahill thesis, we track the oldest snapshot xmin among serializable transactions, and a count of how many active transactions use that xmin. When the count hits zero we find the new oldest xmin and run a clean-up based on that.

- Predicate locking in PostgreSQL will start at the tuple level when possible, with automatic conversion of multiple fine-grained locks to coarser granularity as needed to avoid resource exhaustion. The amount of memory used for these structures will be configurable, to balance RAM usage against SIREAD lock granularity.

- A process-local copy of the locks held by a process, along with the coarser covering locks and their counts, is kept to support granularity promotion decisions with low CPU and locking overhead.

- Conflicts are identified by looking for predicate locks when tuples are written and looking at the MVCC information when tuples are read. There is no matching between two RAM-based locks.

- Because write locks are stored in the heap tuples rather than a RAM-based lock table, the optimization described in the Cahill thesis which eliminates an SIREAD lock where there is a write lock is implemented by the following:

  - When checking a heap write for conflicts against existing predicate locks, a tuple lock on the tuple being written is removed.

  - When acquiring a predicate lock on a heap tuple, we return quickly without doing anything if it is a tuple written by the reading transaction.

- Rather than using conflictIn and conflictOut pointers which use NULL to indicate no conflict and a self-reference to indicate multiple conflicts or conflicts with committed transactions, we use a list of rw-conflicts. With the more complete information, false positives are reduced and we have sufficient data for more aggressive clean-up and other optimizations.

- We can avoid ever rolling back a transaction until and unless there is a pivot where a transaction on the conflict *out* side of the pivot committed before either of the other transactions.

- We can avoid ever rolling back a transaction when the transaction on the conflict *in* side of the pivot is explicitly or implicitly READ ONLY unless the transaction on the conflict *out* side of the pivot committed before the READ ONLY transaction acquired its snapshot. (An implicit READ ONLY transaction is one which committed without writing, even though it was not explicitly declared to be READ ONLY.)

- We can more aggressively clean up conflicts, predicate locks, and SSI transaction information.

- Allow a READ ONLY transaction to "opt out" of SSI if there are no READ WRITE transactions which could cause the READ ONLY transaction to ever become part of a "dangerous structure" of overlapping transaction dependencies.

- Allow the user to request that a READ ONLY transaction wait until the conditions are right for it to start in the "opt out" state described above. We add a DEFERRABLE state to transactions, which is specified and maintained in a way similar to READ ONLY. It is ignored for transactions which are not both SERIALIZABLE and READ ONLY.

- When a transaction must be rolled back, we pick among the active transactions such that an immediate retry will not fail again on conflicts with the same transactions.

- We use the PostgreSQL SLRU system to hold summarized information about older committed transactions to put an upper bound on RAM used. Beyond that limit, information spills to disk. Performance can degrade in a pessimal situation, but it should be tolerable, and transactions won't need to be cancelled or blocked from starting.
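
The oldest-xmin bookkeeping described above can be sketched as follows. This assumes plain integer xmins (no transaction ID wraparound) and hypothetical names; `cleanups` merely records when the clean-up performed by the real implementation would run. The count means most transaction ends take a cheap path, and only the one that drops the count to zero pays for a scan of the active transactions.

```python
class SerializableXminTracker:
    """Oldest snapshot xmin among active serializable transactions, with a count."""

    def __init__(self):
        self.active = {}        # transaction id -> snapshot xmin (ints, no wraparound)
        self.oldest_xmin = None
        self.oldest_count = 0   # active transactions whose xmin equals oldest_xmin
        self.cleanups = []      # stand-in for the SSI clean-up pass

    def begin(self, xact, xmin):
        self.active[xact] = xmin
        if self.oldest_xmin is None or xmin < self.oldest_xmin:
            self.oldest_xmin, self.oldest_count = xmin, 1
        elif xmin == self.oldest_xmin:
            self.oldest_count += 1

    def finish(self, xact):
        xmin = self.active.pop(xact)
        if xmin != self.oldest_xmin:
            return  # cheap path: the oldest xmin is unaffected
        self.oldest_count -= 1
        if self.oldest_count == 0:
            # Only now scan the remaining transactions for the new oldest xmin.
            remaining = list(self.active.values())
            self.oldest_xmin = min(remaining) if remaining else None
            self.oldest_count = remaining.count(self.oldest_xmin)
            self.cleanups.append(self.oldest_xmin)


tracker = SerializableXminTracker()
for xact, xmin in (("A", 100), ("B", 100), ("C", 105)):
    tracker.begin(xact, xmin)
tracker.finish("A")  # count drops to 1: no scan
tracker.finish("B")  # count hits 0: new oldest xmin is 105
print(tracker.oldest_xmin, tracker.cleanups)  # 105 [105]
```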

R&D Issues

This is intended to be the place to record specific issues which need more detailed review or analysis.

- WAL file replay. While serializable implementations using S2PL can guarantee that the write-ahead log contains commits in a sequence consistent with some serial execution of serializable transactions, SSI cannot make that guarantee. While the WAL replay is no less consistent than under snapshot isolation, it is possible that under PITR recovery or hot standby a database could reach a readable state where some transactions appear before other transactions which would have had to precede them to maintain serializable consistency. In essence, if we do nothing, WAL replay will be at snapshot isolation even for serializable transactions. Is this OK? If not, how do we address it?

- External replication. Look at how this impacts external replication solutions, like Postgres-R, Slony, pgpool, HS/SR, etc. This is related to the "WAL file replay" issue.

- UNIQUE btree search for equality on all columns. Since a search of a UNIQUE index using equality tests on all columns will lock the heap tuple if an entry is found, it appears that there is no need to get a predicate lock on the index in that case. A predicate lock is still needed for such a search if a matching index entry which points to a visible tuple is not found.

- Minimize touching of shared memory. Should lists in shared memory push entries which have just been returned to the front of the available list, so they will be popped back off soon and some memory might never be touched, or should we keep adding returned items to the end of the available list?

Discussion

"Serializable Isolation without blocking" - discusses paper in ACM SIGMOD on SSI

"Update on true serializable techniques in MVCC" - discusses Cahill Doctoral Thesis on SSI

"Serializable implementation" - discusses Wisconsin Court System plans

"A third lock method" - discusses development path: rough prototype to refine toward production

"true serializability and predicate locking" - discusses GiST and GIN issues

WIP patch for serializable transactions with predicate locking

"serializable" in comments and names

Serializable Snapshot Isolation

serializable read only deferrable

SSI memory mitigation & false positive degradation

Presentations

From PostgreSQL Conference U.S. East 2010: Current Transaction Isolation in PostgreSQL and future directions

From PGCon 2011: Serializable Snapshot Isolation: Making ISOLATION LEVEL SERIALIZABLE Provide Serializable Isolation

From VLDB 2012: Serializable Snapshot Isolation in PostgreSQL

Publications

[1] Michael J. Cahill, Uwe Röhm, and Alan D. Fekete. 2008. Serializable isolation for snapshot databases. In SIGMOD ’08: Proceedings of the 2008 ACM SIGMOD international conference on Management of data, pages 729–738, New York, NY, USA. ACM. (This paper is listed mostly for context; the subsequent paper covers the same ground and more.)

[2] Michael James Cahill. 2009. Serializable Isolation for Snapshot Databases. PhD thesis, University of Sydney.

[3] Joseph M. Hellerstein, Michael Stonebraker and James Hamilton. 2007. Architecture of a Database System. Foundations and Trends(R) in Databases Vol. 1, No. 2 (2007) 141–259. Of particular interest:

- 6.1 A Note on ACID

- 6.2 A Brief Review of Serializability

- 6.3 Locking and Latching

- 6.3.1 Transaction Isolation Levels

- 6.5.3 Next-Key Locking: Physical Surrogates for Logical Properties

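[4] SQL-92 standard. Search for "serial execution" to find the relevant section.

[5] Dan R. K. Ports and Kevin Grittner. 2012. Serializable Snapshot Isolation in PostgreSQL. Proceedings of the VLDB Endowment, Vol. 5, No. 12.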