PGXN v2/Architecture

Introduction

This document outlines the project to build extension distribution, discovery, and packaging tools and services to power the growth, accessability, and utility of the Postgres extension ecosystem. Taking the overall Postgres community as its audience, it defines the services to be provided and the architecture to run them, as well as the strategic vision to guide project planning and decision-making.

With the goal to think strategically and plan pragmatically, this document describes the former to enable the latter. As such, it is necessarily high-level; details, scoping, and planning will be surfaced in more project-focused documents.

Bear in mind that this document outlines an ambitious, long-term strategy. If you're thinking that there's too much here, that we'er over-thinking and over-designing the system, rest assured that project execution will be fundamentally incremental and pragmatic. This document is the guiding light for the project, and subject to change as development proceeds and new wrinkles arise.

The overall philosophy governing this project is:

- Distributed, loosely-paired architecture of independent services

- Metadata-forward to empower novel, unanticipated services

- Broad availability for anyone to build on

- Comprehensive indexing to be the go-to for all publicly-available extensions

- Design first, but agilely adjust regularly

- Build in parallel where feasible

- Empower decoupled or lightly-coupled interactions for highly parallel development

- Usability and availability: Using the services and tools should be as straightforward and obviously useful as possible for extension developers, service designers, and extension users

Current Architecture

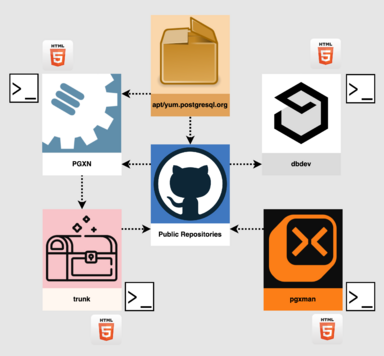

High-level diagram of state of the PostgreSQL extension distribution ecosystem as of early 2024. The various registries, including community and commercial packagers, largely do not interoperate, aside from fetching sources from PGXN or public repositories and some release pipelines that publish to PGXN and dbdev. An exception is a trunk POC that automatically pulls new releases from PGXN.

The extension ecosystem today consists of a variety of at-best loosely connected services that each aim to solve specific problems. These include:

- PGXN, intended to be the comprehensive source to find all extensions and download their source code. Unfortunately it indexes perhaps a third of known extensions, many of which have not been updated in years.

- The PostgreSQL community packaging registries, apt.postgresql.org and yum.postgresql.org. Each contains a subset of extensions, curated by community volunteers who manually script packaging spec files that download source code from a variety of sources, including PGXN and source code repositories like GitHub.

- dbdev, an open source registry for trusted language extensions, or TLEs, which developers publish via a CLI. This registry has no known connection to any other service, though public repositories may use release pipelines to publish TLEs.

- Various other binary packagers, notably the trunk and pgxman projects. Like the community registries, these packagers contain a curated subset of available extensions, are currently maintained manually, and provide binaries for a short list of OSes, architectures, and Postgres versions. The trunk road map reflects an ambition to become the default source for binary packages for all extensions on a much broader set of platforms. To that end it has implemented a proof-of-concept to automatically package new extension releases on PGXN.

These systems have arisen with little reference to each other, nor a comprehensive view of the overall extension ecosystem or a systematic approach to their roles in that system. Each neatly addresses the problems it set out to solve, with some overlap in functionality and similar if incompatible approaches to metadata management in particular.

Challenges

With this disaggregated and uncoordinated diversity of good-faith efforts, the Postgres extension ecosystem remains under-served and suffers from a number of challenges to long-term success. These include:

- Extensions are difficult to find and discover. There is no one, comprehensive, canonical home to find and learn about all publicly-available extensions.

- Extensions remain under-documented and hard to understand. With few exceptions, most ship with a brief if will-intentioned README and no reference documentation or tutorials.

- Extensions are challenging to configure and install. The vast majority use PGXS, make, configure, or pgrx, all of which require a build tools like a compiler. Most users don't otherwise need or want a compiler, just a simple command to install the binaries they need. Alas, binary registries provide quite small subsets of the universe of extensions on a limited number of platforms.

- The maturity of extension projects can be tough to gauge. Without a central repository with consistent, comparable stats, docs, and metadata, interested users must seek out the source for an extension (hard to find again!) and examine heuristics such as repository stars, commit history, and documentation quality, all of which can diverge wildly between competing projects.

- Developer tooling is generally arcane, difficult to learn, and under-documented. There is no one resource to help budding extension developers to learn the craft, automate their processes, and contribute to the broader community.

Future Architecture

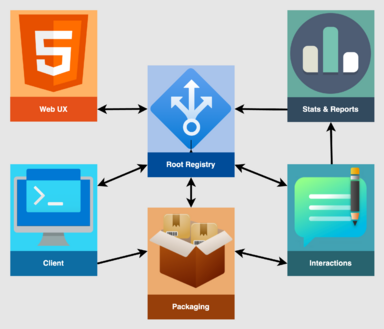

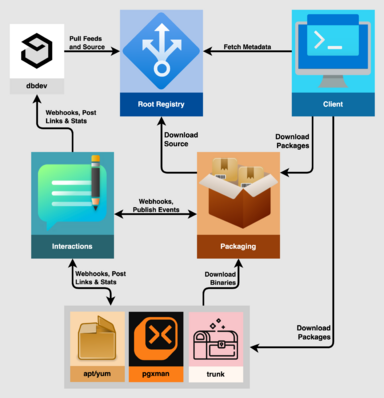

High-level diagram of the six logical services making up the proposed future extension distribution architecture. The Root Registry sits at the center, providing APIs for the other services to consume for their own use cases. Trusted instances of those services submit additional data about extensions via the Interactions service to enhance and enrich the service to better inform and delight users.

To address many of these challenges, over the next year this project will establish a distributed architecture of interacting services, clients, and tools to power the growth and accessability of the extension ecosystem of the future.

This architecture consists of six core systems, each a unit of well-defined capabilities that it "owns." These logical models define guidelines for how they interact, not necessarily physical entities or network diagrams. This document describes each below, defining the context in which it interoperates with other systems as well as the components expected to constitute the service.

Root Registry

The foundational services make up the Root Registry, which aims to be the comprehensive system of record for publicly-available extensions. Developers will publish extension source code to the registry and manage ownership and permissions via its API (using the CLI) or the Web UX.

APIs will provide all the functionality for the registry, allowing clients to search, read docs, download and install extensions, inspect data about other registry objects (users, classifications, etc.), and view or click through to read additional stats and reports.

Capabilities

- Storage Layer

- Root mirror

- Database

- Identity & Authentication API

- Registration

- Authentication

- Account Management

- User Administration

- API Authentication

- Authorization (access controls)

- Publishing API

- Release

- Validation

- Ownership/Permissions

- Indexing Service

- Doc generation

- Search indexing

- Other indexing (e.g., Atom feed)

- Informational API

- Metadata

- Download

- Search

- Docs

- Profiles (extension, user, classification)

- Stats and Reports (via by Interactions)

Context

The Root Registry provides the core functionality to enable the services defined below. In brief:

- The Web UX service provides a human-accessible interaction layer over the Root Registry

- The Client depends on the Root Registry for extension downloads and metadata, including binary package information, and can be used to publish new extensions

- The Packaging service depends on the Root Registry for source code and Interactions for notifications of new releases

- The Interactions service notifies registered downstream services of new releases via webhooks, and provides APIs for the submission of package links and Stats and Reports

- The Stats and Reports services provide additional services to enhance the value of extensions, may download sources and metadata from the Root Registry, and submit data back via Interactions to be displayed in the Web UX

Web UX

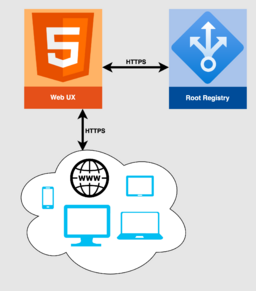

The web service provides the UX for users to use all of root registry APIs. It functions as a beautifully-designed interactive layer over the Root Registry, which provides all of the functionality. Its clients are web-based devices on the internet.

As Interactions add additional APIs, data, and features to the Root Registry, the web UX will be updated to display them.

The Web UX service need not be the only UX service. As a purely display layer over the Root Registry APIs, it sets the standard for user interactions, but others may build their own UX clients, such as native desktop or mobile apps.

Capabilities

- Search extensions and other entities

- Browse classifications

- Read documentation

- Browse source code

- Download source code

- Browse and view user profiles

- List recent releases

- Link to downstream binary packaging services

- Display third-party metadata (stats, badges, links, etc.)

- User registration and authentication

- Extension Administration (permissions, ownership)

- API token management (for users) and administration (for oversight)

- User administration and other administrative tasks

Context

The Web UX service depends on the Root Registry's APIs to provide all functionality (Figure 3). Although the web site should be beautifully designed, engaging, informative, and fun to use, it should provide no business logic of its own.

PGXN provides much of the inspiration for the Root Registry. Think of it as combining pgxn.org and manager.pgxn.org into a single site. PGXN itself will either evolve to assume the responsibilities of the Root Registry as defined here, or be modified to seamlessly interface with the Root Registry, such that new releases on PGXN also go to the Root Registry.

Interactions

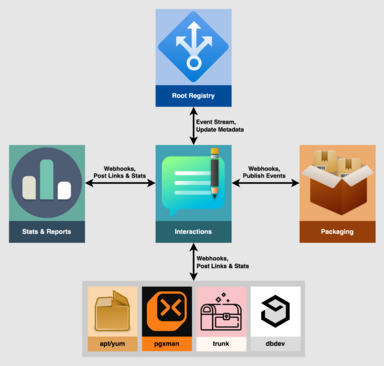

High-level diagram of the Interactions service in context with other services. The Root Repository publishes events to the Interactions service, which then forwards them to registered, trusted Packaging and Stats and Reports services via webhooks. Those services can also submit information back to the Interactions service, including packaging links, stats for aggregation, and other links and badges.

Downstream packagers can also subscribe to Interactions events to trigger their own builds.

The Root Registry will publish events to an event stream service, which will send them to webhooks for registered and approved client services, notably Packaging and Stats and Reports. Meanwhile, writeable APIs will allow trusted, authenticated clients to submit additional metadata, packaging information, reporting results, and stats to the registry.

The design of the Interactions service requires a detailed governance specification to determine what services will be trusted, what criteria they must meet, and the code of conduct and review cadence to determine whether access should be revoked. Technically speaking, it also requires a robust and auditable authentication and authorization system to ensure access controls are enforced, and that's straightforward to administer --- that is, to grant or revoke access.

Capabilities

- Event Stream

- Social posting

- Webhooks

- Write APIs

- Stats (downloads, repo stats)

- Reporting (test results, install results, security scans)

- Packaging

Context

The Interactions services functions as an extension of the Root Registry, managing event streaming and write APIs servicing trusted Packaging and Stats and Reports services. It uses that data to update the appropriate extension metadata managed and published by the Root Registry, to enhance and enrich extension data and its display via the Web UX, to better inform and delight users.

Client

The Client is a key component of the architecture, in that it aims to provide capabilities to empower:

- Users who want to find, install, and report on extensions and their dependencies on their systems

- Developers who need to scaffold new projects, manage extension metadata, build source and run tests on multiple platforms and Postgres versions, and publish new releases

- Packagers who might rely on its broad build pipeline and dependency management features to simplify packaging automation

- CI/CD automation

The goal is to build a robust, intuitive client that's easily installed on a vast array of OSes and platforms (e.g, single cross-compiled Go or Rust binary), and has an architecture to recognize and work with a broad matrix of configurations, including:

- OSes: Linux, macOS, *BSD, Windows, etc.

- Architectures: amd64, arm64, i386, etc.

- Processing capabilities: CPU, GPU, avx512, etc.

- PostgreSQL versions: 9.1 -- 17

- Packagers: apt.postgresql.org, yum.postgresql.org, trunk, dbdev, pgxman, etc.

- Build Pipelines: PGXS, pgrx, configure, make, cmake, etc.

- Extension types: SQL extensions, background workers, apps, loadable modules, etc.

The goal is for the client to be the default choice for developing and installing extensions on most platforms to use with most PostgreSQL distributions.

Capabilities

- User SDK

- List extensions

- Search extensions

- Determine environment (Postgres version, OS, architecture)

- Fetch the appropriate binary package for the configuration

- Resolve and install all extension, system, and library dependencies

- Install (or re-install) the package

- Build from source for all supported pipelines

- Validate an extension has been installed

- Confirm successful installation

CREATE EXTENSIONet al. in one or more databases- Use private registries as well as the Root Registry

- Report on installed extensions and their status (used/not used, out of date)

- Developer SDK

- Initialize projects

- Manage metadata

- Build and install for all supported pipelines

- Run Tests on different Postgres versions

- Bundle and Publish

- Publish to private registry

- CLI wrapper around SDKs

- Execute CI/CD tasks

- Support as many OSes as possible

- Easy distribution (single binary for each OS/arch)

Context

As shown in Figure 2 and Figure 4, the Client interacts with the Root Registry the Packaging Service, and potentially system and downstream packagers.

The Client relies the Root Registry's API to publish extensions, search extensions, and fetch metadata for extensions. Based on this metadata, it knows how to:

- Download, build, and install an extension and its dependencies

- Determine whether the Packaging service provides an appropriate binary and install it

- Evaluate the available binary packages from downstream packagers and choose the best one to install

This is why it's key for the Client to have a deep understanding of metadata, such that it can make metadata management as simple as possible for developers and make informed (yet easily overridden) decisions on behalf of users.

Each of these classes of metadata requires significant design and development. For building from source, the Client must recognize and understand how to work with a wide variety of build pipelines and dependency management systems. It also needs the capability to tweak configurations for each pipeline for finer control over extension installation, such as preventing some files from being installed (e.g. docs) and setting install locations (PGDATA and the various directories reported by pg_config: bindir, docdir, libdir, etc.), as required in particular for installing binaries from the Packaging service.

When working with downstream packagers, it needs to understand the system it manages so it can install the best possible package and all of its dependencies. Likely some sort of configuration can lock it to a particular packager, but at the same time it needs to be as simple as possible for someone to effortlessly find, download, and try an extension.

Fun Idea

Would it be possible to use the CLI SDK as a Postgres extension, so that one could do everything the client can do --- or at least install binary packages --- but via SQL function calls. See pginstall for a POC.

Packaging

High-level diagram of the Packaging Service context. The Packaging service relies on release events published to a webhook by the Interactions service to learn of new releases, and downloads source code from the Root Registry to build binaries. For each binary package it builds, it publishes an event to the Interactions service.

For such events, the Interactions can call webhooks for downstream packagers such as apt, yum, pgxman, and trunk. These services can then download binaries from Packaging and bundle them into packages for their specific packaging systems and post links back to Interactions.

Alternatively, as modeled by dbdev a downstream packager might listen for webhook events for new releases (or rely on a feed), download source code from the Root Registry, and bundle extensions from source. They also can post links back to Interactions.

The Packaging service listens for webhook calls or feed updates to download, build, and package new releases for various platforms. Ideally it will support a wide variety of OSes, OS versions, Postgres versions, and architectures through effective use of VMs or containers. Its packages will simply contain the extension files to install, and the client will know how to install them into a given cluster.

The resulting repository of tarballs (or zip files; see the Binary distribution format for inspiration) will function as the default installation source for the client. Other packagers, however, can depend on either the Packaging service or the Root Registry to build their packages.

These downstream packagers can be notified of new source releases or binary builds via Interactions, and trusted services also submit packaging links, as well as periodic download stats where available.

This architecture aims to empower any number of entities to create and maintain binary extension packaging repositories. We expect that no single service could meet the needs of every available OS, platform, hardware architecture, or version of PostgreSQL, though the Packaging service itself aims to cover the 90% of modern use cases.

Capabilities

- Architecture to manage build nodes

- Webhook to listen for new releases

- Queue builds to build nodes (VMs or containers) for Linux, macOS and Windows, 2-3 recent OS versions, and 64-bit architectures

- Support for building against multiple versions of Postgres, at least those still supported by the core

- Client to download source, build binaries, assemble metadata (including system-specific package and library dependencies) and bundle into a zip file

- Public key signing for the zip files

- Post results to Interactions so the binary support can be listed in the Root Registry

- Repository of zip files for download

- Mirroring (rsync?)

- Protocol for third parties to build their own packaging registries that the client can use transparently

- Binary build events to be consumed by downstream packagers

We expect the that overall architecture will allow anyone to create their own registries and automatically build packages from binaries created by the Packaging service or sources released on the Root Registry. In this way, the benefit of extensions may be extended to a far broader array of OSes, platforms and architectures than would otherwise be possible.

Context

The Packaging service will be notified of new extension releases by webhook callback from the Interactions service.

On a release event, Packaging will download source code from the Root Registry to build on each of its build nodes. Those nodes will also rely on the Client SDK's broad support for build pipelines to build extensions. Once an extension is built, it will collect the relevant files (control files, SQL scripts, dynamic library files, and docs), along with a manifest describing the files and the binary configuration (OS version, Postgres version, architecture, configuration requirements (e.g. shared_preload_libraries), system and library dependencies, etc.) into a zip archive.

For each binary package thus created, it will publish an event to the Interactions service. Downstream packagers (e.g., yum, apt, pgxman, Homebrew, Chocolatey, etc.) may subscribe to these events to build their own packages from the binaries created by the Packaging service. Or they might subscribe to the new release events and build from source.

Either way, using the metadata for the extension, they can automatically build and register binary packages. Once packages have been published, trusted packaging clients can post links to them back to the Interactions service, which will then update metadata so the links appear on the extension's Web UX page.

The Client also benefits from these links, as it then can select the best available package to install on a given system. In other words, if the client is asked to install an extension into a community Apt-based Postgres cluster, it can select the community Apt package for the extension. For clusters with an indeterminate source (e.g., built from source), it will default to install binaries from the Packaging service or, if the user wants, from source downloaded from the Root Registry.

Trusted Packagers can also periodically post stats updates, including download counts, install successes and failures, etc., to the Interactions service, which aggregations these stats in extension metadata for display on the site or by clients.

Stats and Reports

High-level diagram of the Stats and Reporting Service context. The Stats and Reporting service rely on release notifications --- webhooks from Interactions or feeds from the Root Registry --- to perform tasks for new releases. Each Stats and Reporting service does its own thing, perhaps just for new events or on a regular cadence, collecting stats about an extension. Trusted services can then post stats, links, badges, etc. via Interactions.

Stats and Reporting Services do things with extensions and then report the results. Trusted instances can submit stats, links, badges, and the links to the Interactions service, to be displayed via the Web UX and other clients.

Stats and Reporting is deliberately a super open category that just means "services that do stuff with extensions". What that stuff might be is unspecified. Here are some ideas:

- A service that announces new releases by posting to social media sites, email lists, Slack channels, etc.

- A service that periodically reports repository stats for extensions, such as stars, issue count, pull count, number of contributors, etc. Updating these stats for display in the Web UX helps centralize the evaluation of extension quality.

- A service that downloads extensions for each release and smoke tests them on a variety of OSes and Postgres versions and builds a matrix reporting the results on the web. It might submit simple stats (pass/fail/skip counts), a badge indicating the overall result, and a link back to the matrix page.

- A variant of the smoke testing service that tests upgrades from earlier versions and reports stats for successes and failures and produces diffs for the changes.

- A service that allows users to rate extensions or write reviews. It might submit a link to the discussion along with some stats like average rating or comment count. This can help users evaluate the quality of an extension.

- A service that downloads extensions for each release and scans them for security vulnerabilities. It might submit some basic stats (advisory counts, quality badge) and a link to a deeper assessment. It could even be a paid service, charging customers for access more information, or providing a private registry with only low-vulnerability extensions.

- A service that curates and classifies extensions according to its own priorities and systems. It might submit back the main classification term, or social up/down votes for classifications from users.

For the purposes of this project, we propose to implement the first two of these examples: A social media announcement service and a source repository stats aggregator. These two services both provide a high level of quality signal that's important for people to learn of new releases and assess developer activity, as well as proving the model and setting examples for others to follow.